List of AMD GPUs for Artificial Intelligence

OLEKSANDR SYZOV



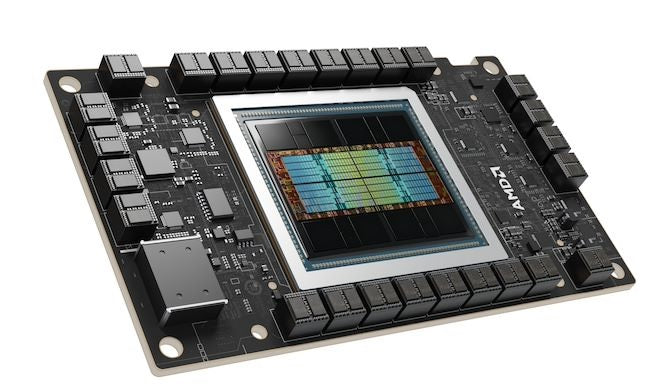

AMD's Instinct accelerators are designed for high-performance computing (HPC) and artificial intelligence (AI) workloads, ranging from large-scale model training to efficient inference. Current models use AMD's CDNA architecture, which is optimized for compute rather than traditional graphics rendering.

Here's a list of notable AMD Instinct accelerators for AI, with their key technical characteristics:

1. AMD Instinct MI300 Series (CDNA 3 Architecture)

The MI300 series is the newest generation covered here. It uses a chiplet design, and the MI300A combines CPU and GPU cores in a single package. These accelerators are built for the most demanding AI and HPC tasks.

- AMD Instinct MI325X (GPU)
  - Architecture: CDNA 3
  - GPU Compute Units: 304 CUs
  - Stream Processors: 19,456
  - Memory Size: 256 GB HBM3E
  - Memory Bandwidth: 6 TB/s
  - Peak FP64/FP32 Matrix Performance: 163.4 TFLOPS
  - Peak FP16/BF16 Performance: 1307.4 TFLOPS
  - Peak FP8 Performance: 2614.9 TFLOPS
  - Bus Interface: PCIe Gen5 x16
  - Infinity Fabric™ Links: 8
  - Maximum TDP/TBP: 1000W
  - Intended for: Generative AI (especially large language models), large-scale HPC, and AI inference requiring massive memory capacity and bandwidth.

- AMD Instinct MI300X (GPU)
  - Architecture: CDNA 3
  - GPU Compute Units: 304 CUs
  - Stream Processors: 19,456
  - Memory Size: 192 GB HBM3
  - Memory Bandwidth: 5.3 TB/s
  - Peak FP64/FP32 Matrix Performance: 163.4 TFLOPS
  - Peak FP16/BF16 Performance: 1307.4 TFLOPS
  - Peak FP8 Performance: 2614.9 TFLOPS
  - Bus Interface: PCIe Gen5 x16 (typically via OAM module)
  - Infinity Fabric™ Links: 8
  - Maximum TDP/TBP: 750W
  - Intended for: Generative AI, large-scale AI training and inference, high-performance computing.

- AMD Instinct MI300A (APU - Accelerated Processing Unit)
  - Architecture: CDNA 3 (GPU) + Zen 4 (CPU)
  - CPU Cores: 24 "Zen 4" x86 CPU cores
  - GPU Compute Units: 228 CUs
  - Stream Processors: 14,592
  - Memory Size: 128 GB Unified HBM3
  - Memory Bandwidth: 5.3 TB/s
  - Peak FP64/FP32 Matrix Performance: 122.6 TFLOPS
  - Peak FP16/BF16 Performance: 980.6 TFLOPS
  - Peak FP8 Performance: 1961.2 TFLOPS
  - Bus Interface: PCIe Gen5 x16 (APU SH5 socket)
  - Infinity Fabric™ Links: 8
  - Maximum TDP/TBP: 550W / 760W (Peak)
  - Intended for: HPC workloads that benefit from tight CPU-GPU integration, unified memory, and high bandwidth; also suitable for some AI tasks where CPU interaction is critical.
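To make the memory sizes listed for the MI300 series more concrete, here is a rough sketch of a weights-only fit check. The helper names (`weights_gb`, `fits`) and the model sizes are illustrative assumptions, not AMD figures, and the estimate deliberately ignores the KV cache, activations, and runtime overhead, all of which need additional memory in practice.

```python
# Weights-only memory estimate. Ignores KV cache, activations, and runtime overhead.
BYTES_PER_PARAM = {"fp16": 2, "bf16": 2, "fp8": 1}

# Memory sizes in GB, as listed above for the MI300 series.
MEMORY_GB = {"MI325X": 256, "MI300X": 192, "MI300A": 128}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * BYTES_PER_PARAM[dtype]


def fits(params_billion: float, dtype: str) -> dict:
    """Map each accelerator to True if the weights alone fit in its memory."""
    need = weights_gb(params_billion, dtype)
    return {name: need <= capacity for name, capacity in MEMORY_GB.items()}


if __name__ == "__main__":
    # Hypothetical model sizes, in billions of parameters.
    for size in (70, 110):
        print(f"{size}B params in fp16: {weights_gb(size, 'fp16')} GB ->", fits(size, "fp16"))
```

Under these assumptions, a 70-billion-parameter model in FP16 needs about 140 GB for weights alone, which is within the 192 GB and 256 GB parts but above the 128 GB of the MI300A.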

2. AMD Instinct MI200 Series (CDNA 2 Architecture)

The MI200 series accelerators offered a significant leap in performance for HPC and AI, particularly for double-precision workloads.

- AMD Instinct MI250X (GPU)
  - Architecture: CDNA 2
  - Compute Units: 220 CUs
  - Stream Processors: 14,080
  - Memory Size: 128 GB HBM2e
  - Memory Bandwidth: Up to 3.2 TB/s
  - Peak FP64 Matrix Performance: 95.7 TFLOPS
  - Peak FP16/BF16 Performance: 383 TFLOPS
  - Peak INT8 Performance: 383 TOPS
  - Bus Interface: PCIe 4.0 x16
  - Infinity Fabric™ Links: Up to 8
  - Intended for: Exascale HPC, large-scale AI training, scientific simulations.

- AMD Instinct MI210 (GPU)
  - Architecture: CDNA 2
  - Compute Units: 104 CUs
  - Stream Processors: 6,656
  - Memory Size: 64 GB HBM2e
  - Memory Bandwidth: Up to 1.6 TB/s
  - Peak FP64 Matrix Performance: 45.3 TFLOPS
  - Peak FP16/BF16 Performance: 181 TFLOPS
  - Peak INT8 Performance: 181 TOPS
  - Bus Interface: PCIe 4.0 x16
  - Infinity Fabric™ Links: 3
  - Intended for: Mainstream HPC, AI training and inference in PCIe form factors, research, and scientific discovery.

3. AMD Instinct MI100 (CDNA 1 Architecture)

The MI100 was AMD's first accelerator based on the CDNA architecture, introducing new Matrix Cores for enhanced AI performance.

- AMD Instinct MI100 (GPU)
  - Architecture: CDNA 1
  - Compute Units: 120 CUs
  - Stream Processors: 7,680
  - Memory Size: 32 GB HBM2
  - Memory Bandwidth: Up to 1.2 TB/s
  - Peak FP64 Performance: 11.5 TFLOPS
  - Peak FP32 Matrix Performance: 46.1 TFLOPS
  - Peak FP16 Performance: 184.6 TFLOPS
  - Peak BF16 Performance: 92.3 TFLOPS
  - Bus Interface: PCIe 4.0 x16
  - Infinity Fabric™ Links: 3
  - Intended for: HPC workloads, AI training, deep learning, and scientific applications.
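The compute-unit and stream-processor counts listed for the CDNA parts are related: each compute unit in these architectures contains 64 stream processors. The following sketch cross-checks the figures quoted in this article. The `LISTED` table and the `mismatches` helper are illustrative names introduced here.

```python
# Each GCN/CDNA compute unit (CU) contains 64 stream processors.
STREAM_PROCESSORS_PER_CU = 64

# (compute units, stream processors) as listed in this article.
LISTED = {
    "MI325X": (304, 19_456),
    "MI300X": (304, 19_456),
    "MI300A": (228, 14_592),
    "MI250X": (220, 14_080),
    "MI210": (104, 6_656),
    "MI100": (120, 7_680),
}


def mismatches(listed: dict) -> dict:
    """Return the entries whose stream-processor count is not CUs * 64."""
    return {
        name: (cus, sps)
        for name, (cus, sps) in listed.items()
        if cus * STREAM_PROCESSORS_PER_CU != sps
    }


if __name__ == "__main__":
    bad = mismatches(LISTED)
    print("all consistent" if not bad else f"inconsistent entries: {bad}")
```

The same rule is a quick sanity check for any spec sheet that gives both numbers.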

4. Older Instinct Accelerators (GCN Architecture)

While less common for new AI deployments, these older models laid the groundwork for AMD's Instinct series.

- AMD Radeon Instinct MI60 (GPU)
  - Architecture: Vega 20 (GCN 5.1)
  - Stream Processors: 4,096
  - Memory Size: 32 GB HBM2
  - Memory Bandwidth: Up to 1 TB/s
  - Peak FP64 Performance: Up to 7.4 TFLOPS
  - Peak FP32 Performance: Up to 14.7 TFLOPS
  - Bus Interface: PCIe 4.0 x16
  - Intended for: Earlier HPC and AI workloads, cloud computing, and rendering.

- AMD Radeon Instinct MI25 (GPU)
  - Architecture: Vega 10 (GCN 5.0)
  - Stream Processors: 4,096
  - Memory Size: 16 GB HBM2
  - Memory Bandwidth: 436.2 GB/s
  - Peak FP32 Performance: 12.29 TFLOPS
  - Peak FP16 Performance: 24.58 TFLOPS
  - Bus Interface: PCIe 3.0 x16
  - Intended for: Early data center AI and HPC applications.

Key AMD Technologies for AI:

- CDNA Architecture: AMD's compute-optimized architecture for data center GPUs, designed specifically for HPC and AI workloads.

- ROCm (originally Radeon Open Compute platform): An open-source software stack for GPU programming on AMD Instinct accelerators, covering drivers, compilers, libraries, and the HIP programming model. It is positioned as an open alternative to CUDA.

- Infinity Fabric™: AMD's high-bandwidth, low-latency interconnect technology that enables direct communication between GPUs and CPUs, crucial for scaling performance in multi-accelerator systems.

- HBM (High Bandwidth Memory): Integrated directly on the GPU package, HBM provides significantly higher memory bandwidth compared to traditional GDDR memory, which is critical for memory-bound AI workloads.
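To illustrate why memory bandwidth matters for memory-bound workloads, the sketch below computes two rough quantities from figures listed in this article: the roofline "ridge point" (peak FLOPS divided by bandwidth, in FLOPs per byte) and a lower bound on the time to read a block of data from HBM once. The function names and the 140 GB example are assumptions for illustration, and real kernels rarely reach either peak.

```python
# Peak FP16/BF16 throughput (TFLOPS) and memory bandwidth (TB/s) as listed in this article.
SPECS = {
    "MI325X": (1307.4, 6.0),
    "MI210": (181.0, 1.6),
}


def ridge_point(peak_tflops: float, bandwidth_tbs: float) -> float:
    """FLOPs per byte above which a kernel can become compute-bound.

    (TFLOP/s) / (TB/s) reduces to FLOP/byte. Kernels below this
    arithmetic intensity are limited by memory bandwidth instead.
    """
    return peak_tflops / bandwidth_tbs


def min_stream_time_ms(data_gb: float, bandwidth_tbs: float) -> float:
    """Lower bound, in milliseconds, on reading data_gb from HBM once."""
    bandwidth_gbs = bandwidth_tbs * 1000.0  # TB/s -> GB/s
    return data_gb / bandwidth_gbs * 1000.0  # seconds -> milliseconds


if __name__ == "__main__":
    for name, (tflops, tbs) in SPECS.items():
        print(f"{name}: ridge point ~{ridge_point(tflops, tbs):.1f} FLOP/byte")
    # Hypothetical example: streaming 140 GB of weights once on an MI325X.
    print(f"140 GB at 6 TB/s: at least {min_stream_time_ms(140, 6.0):.1f} ms")
```

Operations such as token-by-token LLM decoding typically perform only a few FLOPs per byte read, far below these ridge points, so their speed tracks memory bandwidth rather than peak TFLOPS.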

AMD is continually advancing its Instinct product line to compete in the rapidly evolving AI hardware landscape, focusing on memory capacity, bandwidth, and open software solutions.
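On the software side, ROCm builds of PyTorch reuse the `torch.cuda` namespace, so existing GPU code often runs without changes. The sketch below shows one way to check which accelerators are visible. It assumes PyTorch may or may not be installed, and the function name `describe_accelerators` is introduced here for illustration.

```python
def describe_accelerators() -> list:
    """Return one line per visible GPU, or a single explanatory line."""
    try:
        import torch
    except ImportError:
        return ["PyTorch is not installed"]

    # ROCm builds set torch.version.hip; CUDA and CPU-only builds leave it as None.
    backend = "ROCm/HIP build" if getattr(torch.version, "hip", None) else "non-ROCm build"

    if not torch.cuda.is_available():
        return [f"{backend}: no GPU visible"]

    lines = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        lines.append(f"{backend}: {props.name}, {props.total_memory / 1e9:.0f} GB")
    return lines


if __name__ == "__main__":
    for line in describe_accelerators():
        print(line)
```

On a correctly configured ROCm system this should list each Instinct accelerator with its memory size; on other machines it reports what it found instead of failing.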
