Dell PowerEdge XE9785 / XE9785L AI Servers with AMD Instinct MI350

OLEKSANDR SYZOV


Free professional consultation on server hardware.

Tel: +38 (067) 819-38-38 / E-mail: server@systemsolutions.com.ua


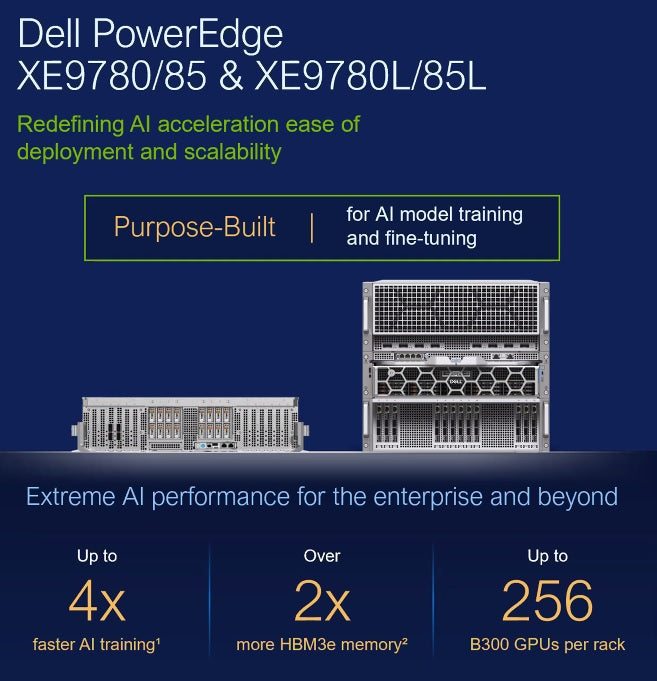

The Dell PowerEdge XE9785 and XE9785L AI Servers, featuring AMD Instinct MI350 series GPUs, are designed for highly demanding Artificial Intelligence (AI) and High-Performance Computing (HPC) workloads.

Here are their technical characteristics and intended tasks:

Dell PowerEdge XE9785 / XE9785L Technical Characteristics:

- GPU Accelerators: The servers support AMD Instinct MI350 series GPUs. Key specifications of the MI350 series include:

- Memory: 288GB of HBM3E memory per GPU. This is a significant amount of high-bandwidth memory, crucial for large AI models.

- Memory Bandwidth: Up to 8 TB/s per GPU. This high bandwidth allows rapid data transfer to and from the GPU, accelerating complex computations.

- Architecture: Built on the 4th Gen AMD CDNA™ architecture (CDNA4).

- Process Node: Expected to be built using 3nm process technology.

- Performance: AMD claims up to 35 times higher inference performance than the previous-generation MI300X platform. The MI350 series also supports the low-precision FP4 and FP6 formats, which speed up generative AI and inference workloads. FP16 performance has been estimated at 3,000-4,000 TFLOPS.

- Interconnect: The servers feature 8-way AMD Infinity Fabric interconnects, enabling high-speed communication between the GPUs within the server.

- Compatibility: Designed for drop-in compatibility with existing AMD Instinct MI300 series-based systems, offering an upgrade path.

- CPUs: The servers are powered by 5th Generation AMD EPYC™ processors (9005 series), which provide host processing to complement the GPUs.

- Cooling: Available in both air-cooled (XE9785) and liquid-cooled (XE9785L) configurations. For extremely dense AI and HPC deployments, Dell also offers the PowerCool Enclosed Rear Door Heat Exchanger (eRDHx), a rack-level liquid-cooled rear door that, according to Dell, captures 100% of IT heat and can significantly reduce cooling energy costs. With the eRDHx, air-cooled racks can support up to 80 kW each.

- Storage: They can support up to 16 NVMe drives, providing fast local storage for data-intensive AI workloads.

- Networking: Support for 200G/400G networking, which is essential for fast model training and data transfer between nodes in large clusters.

- Software Stack: Dell supports the open-source AMD ROCm™ software stack, which provides enterprise-grade support for leading AI frameworks such as PyTorch and TensorFlow and integrates with container platforms such as Docker and Kubernetes.

- Scalability: Designed for rack-scale deployment, allowing dense compute clusters.
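To make the 288 GB-per-GPU figure and the FP4/FP6 formats more concrete, here is a rough sizing sketch. It assumes eight GPUs per server (matching the 8-way Infinity Fabric configuration), tightly packed formats (2 bytes per parameter for FP16, 0.75 for FP6, 0.5 for FP4), and counts model weights only. KV cache, activations and runtime overhead are ignored, so treat the result as a lower bound. The function names are illustrative, not part of any Dell or AMD tool.

```python
import math

# Illustrative assumptions based on the figures above:
# 288 GB of HBM3E per GPU and eight GPUs per server.
GPU_MEMORY_GB = 288
GPUS_PER_SERVER = 8

# Storage cost per parameter, assuming tightly packed formats.
BYTES_PER_PARAM = {
    "FP16": 2.0,
    "FP6": 0.75,
    "FP4": 0.5,
}


def weight_footprint_gb(num_params: float, fmt: str) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def gpus_needed(num_params: float, fmt: str) -> int:
    """Minimum number of GPUs whose combined HBM can hold the weights.

    Ignores KV cache, activations and runtime overhead, so real
    deployments need headroom beyond this figure.
    """
    return max(1, math.ceil(weight_footprint_gb(num_params, fmt) / GPU_MEMORY_GB))


def fits_in_one_server(num_params: float, fmt: str) -> bool:
    """True if the weights fit in the combined HBM of one 8-GPU server."""
    return gpus_needed(num_params, fmt) <= GPUS_PER_SERVER


if __name__ == "__main__":
    params = 405e9  # hypothetical 405-billion-parameter model
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt}: {weight_footprint_gb(params, fmt):.1f} GB -> "
              f"{gpus_needed(params, fmt)} GPU(s)")
```

For a hypothetical 405-billion-parameter model, this gives 810 GB of weights (at least 3 GPUs) at FP16 but only 202.5 GB (a single GPU) at FP4, while one server offers 8 × 288 GB = 2,304 GB in total.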

Intended Tasks and Use Cases:

The Dell PowerEdge XE9785 and XE9785L servers with AMD Instinct MI350 GPUs are specifically intended for:

- Large Language Model (LLM) Training and Fine-tuning: The massive memory capacity (288GB HBM3E per GPU) and high memory bandwidth of the MI350 GPUs make these servers ideal for training and fine-tuning very large language models, which require immense computational power and memory to handle their vast number of parameters.

- AI Inference: Given AMD's claimed gains in inference performance, these servers are well suited to deploying trained AI models for real-time inference across a range of applications, from generative AI to advanced analytics.

- Generative AI Workloads: The support for low-precision compute formats (FP4, FP6) directly accelerates generative AI processes.

- High-Performance Computing (HPC): Beyond AI, the powerful compute capabilities and high-bandwidth memory also make them excellent for traditional HPC tasks, such as scientific simulations, complex data analysis, and advanced research.

- Machine Learning (ML) Workloads: They are designed to handle demanding ML workloads, including training complex neural networks and processing large datasets.

- Enterprise AI Deployments: These servers are positioned as a core component of Dell's "AI Factory" concept, providing the foundational infrastructure for enterprises to manage the entire AI lifecycle, from data ingestion and model training to deployment and management of AI agents on-premises.

- Multi-modal AI Applications: The combination of powerful GPUs and robust software support makes them suitable for AI models that process multiple data types (e.g., text, images, audio).
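Why memory bandwidth matters so much for inference can be shown with a common rule of thumb. When an LLM generates one token at a time, every weight must be read from HBM once per token, so the token rate cannot exceed bandwidth divided by weight size. The sketch below assumes the 8 TB/s per-GPU figure quoted above, weights split evenly across GPUs (tensor parallelism), batch size 1, and ignores KV-cache reads and inter-GPU communication. It is an upper bound, not a benchmark, and the function name is hypothetical.

```python
def max_decode_tokens_per_s(num_params: float, bytes_per_param: float,
                            bandwidth_tb_s: float = 8.0, num_gpus: int = 1) -> float:
    """Upper bound on tokens/s for batch-size-1 decoding when memory-bound.

    Assumes every weight is streamed from HBM once per generated token and
    that weights are sharded evenly, so per-GPU bandwidth adds up.
    """
    if num_params <= 0 or bytes_per_param <= 0:
        raise ValueError("num_params and bytes_per_param must be positive")
    weight_bytes = num_params * bytes_per_param
    aggregate_bytes_per_s = bandwidth_tb_s * 1e12 * num_gpus
    return aggregate_bytes_per_s / weight_bytes


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    for label, bpp in (("FP16", 2.0), ("FP4", 0.5)):
        for gpus in (1, 8):
            rate = max_decode_tokens_per_s(params, bpp, num_gpus=gpus)
            print(f"{label}, {gpus} GPU(s): <= {rate:.0f} tokens/s")
```

For a hypothetical 70-billion-parameter model at FP16, one GPU is capped at roughly 57 tokens/s and eight GPUs at roughly 457 tokens/s. At FP4 the ceiling rises fourfold because four times fewer bytes are read per token. Serving systems batch requests so that each weight read is shared by many tokens, which is how real throughput scales beyond this single-stream bound.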

In short, these servers are built to deliver leading-edge performance and efficiency for organizations looking to accelerate their AI and HPC initiatives, particularly those involving large and complex models.

Best prices on official DELL PowerEdge R760 servers in Ukraine.

Free consultation by phone: +38 (067) 819 38 38

Server models available from stock in Kyiv:

Dell PowerEdge R760 Server - Intel Xeon Silver 4510 2.4-4.1 GHz 12 Cores

Dell PowerEdge R760 Server - Intel Xeon Silver 4514Y 2.0-3.4 GHz 16 Cores

Dell PowerEdge R760 Server - Intel Xeon Gold 6526Y 2.8-3.9 GHz 16 Cores

Dell PowerEdge R760 Server - Intel Xeon Gold 5420+ 2.0-4.1 GHz 28 Cores