Dell PowerEdge XE9780 / XE9780L AI Servers with Nvidia HGX B300

OLEKSANDR SYZOV

Free professional consultation on server hardware.

Tel: +38 (067) 819-38-38 / E-mail: server@systemsolutions.com.ua

DELL PowerEdge R760 Server Configurator

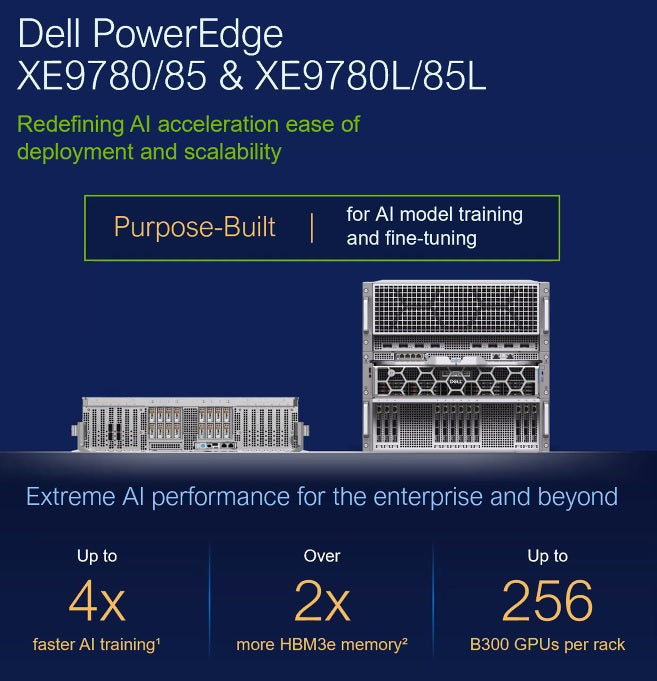

The Dell PowerEdge XE9780 and XE9780L (liquid-cooled) servers are cutting-edge AI servers designed in collaboration with NVIDIA to handle the most demanding AI, machine learning, deep learning, and high-performance computing (HPC) workloads. They are successors to Dell's XE9680 and represent a significant leap in AI infrastructure.

Technical Characteristics:

The Dell PowerEdge XE9780 and XE9780L are built around the NVIDIA HGX B300 platform, which is designed for extreme AI performance. While the exact specifications can vary based on configuration and the specific B300 variant (e.g., B300 NVL16), here's a general overview of their technical characteristics:

Processor:

- Dual 6th Generation Intel Xeon Scalable processors with up to 128 cores per processor.

GPU Acceleration:

- 8 NVIDIA Blackwell Ultra GPUs on an NVIDIA HGX B300 baseboard, fully interconnected with NVIDIA NVLink for extremely high-bandwidth GPU-to-GPU communication.

- In the HGX B300 NVL16 name, "NVL16" counts the 16 Blackwell Ultra compute dies (two per GPU package) connected via NVLink; it describes the same 8 GPU packages, not 16 separate GPUs.

- GPU memory: up to 2.3 TB of HBM3e per system (HGX B300 NVL16).

- Significantly higher performance than previous generations for AI training and inference. Dell claims up to 4x faster LLM training with the 8-way NVIDIA HGX B300 than with its predecessor, the XE9680.
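The 2.3 TB figure follows from simple arithmetic. The sketch below assumes 288 GB of HBM3e per Blackwell Ultra GPU package and two compute dies per package (which is how 8 packages become "NVL16"); the constant and function names are illustrative only.

```python
# Assumptions: 288 GB HBM3e per Blackwell Ultra GPU package,
# two compute dies per package (8 packages -> "NVL16").
GPU_PACKAGES = 8
DIES_PER_PACKAGE = 2
HBM_PER_PACKAGE_GB = 288


def hgx_b300_memory_gb(packages: int = GPU_PACKAGES,
                       hbm_per_package_gb: int = HBM_PER_PACKAGE_GB) -> int:
    """Total HBM3e across the baseboard, in decimal GB."""
    return packages * hbm_per_package_gb


total_gb = hgx_b300_memory_gb()
dies = GPU_PACKAGES * DIES_PER_PACKAGE
print(f"{dies} dies, {total_gb} GB ~ {total_gb / 1000:.1f} TB HBM3e")
```

Running it prints `16 dies, 2304 GB ~ 2.3 TB HBM3e`, matching the per-system figure quoted above.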

Memory:

- 32 DDR5 DIMM slots, supporting up to 4 TB of RDIMM memory.

- Memory speeds up to 6400 MT/s with 6th Gen Intel Xeon Scalable processors.

- Supports registered ECC DDR5 DIMMs only.
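As a rough check on these memory figures, the sketch below assumes 128 GB RDIMMs in all 32 slots (an illustrative module size, not taken from the spec) and computes the theoretical peak bandwidth of a single DIMM at the quoted 6400 MT/s. Actual bandwidth depends on how many DIMMs share a memory channel and on the CPU SKU.

```python
# Assumption: 128 GB RDIMMs populate all 32 slots (illustrative module size).
DIMM_SLOTS = 32
DIMM_SIZE_GB = 128
TRANSFER_RATE_MTS = 6400      # mega-transfers per second, as quoted above
DATA_BYTES_PER_TRANSFER = 8   # 64 data bits per DDR5 DIMM (two 32-bit subchannels, ECC bits excluded)

dram_total_gb = DIMM_SLOTS * DIMM_SIZE_GB   # 4096 GB
dram_total_tb = dram_total_gb / 1024        # 4.0 TB in binary units

# MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
peak_gb_s_per_dimm = TRANSFER_RATE_MTS * DATA_BYTES_PER_TRANSFER / 1000

print(f"{dram_total_tb} TB total, {peak_gb_s_per_dimm} GB/s theoretical peak per DIMM")
```

This prints `4.0 TB total, 51.2 GB/s theoretical peak per DIMM`.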

Storage:

- Front bays support:

  - Up to 8 x 2.5-inch NVMe/SAS/SATA SSDs (max 122.88 TB).

  - Up to 16 x E3.S NVMe direct drives (max 122.88 TB).

- Internal RAID controller: PERC H965i (not supported with Intel Gaudi3).

- Internal boot: Boot Optimized Storage Subsystem (BOSS-N1, NVMe) with hardware RAID 1 across 2 x M.2 SSDs.

- Software RAID: S160.
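Both front-bay options reach the same 122.88 TB maximum. One way the numbers work out, assuming 15.36 TB 2.5-inch drives and 7.68 TB E3.S drives (illustrative drive sizes, not taken from the spec), is sketched below. `Decimal` keeps the results exact instead of picking up float rounding.

```python
from decimal import Decimal


def raw_capacity_tb(bays: int, drive_tb: str) -> Decimal:
    """Raw (pre-RAID) capacity in decimal TB for `bays` identical drives."""
    return bays * Decimal(drive_tb)


# Assumed drive sizes that reproduce the quoted 122.88 TB maximums.
print(raw_capacity_tb(8, "15.36"))   # 2.5-inch bays
print(raw_capacity_tb(16, "7.68"))   # E3.S bays
```

Both calls print `122.88`. Usable capacity will be lower once a RAID level is applied through the PERC controller.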

Networking:

- Designed to connect to high-density, low-latency switches delivering up to 800 Gb/s of throughput (e.g., NVIDIA Quantum-X800 InfiniBand or Spectrum-X Ethernet).

- The HGX B300 platform integrates 8 NVIDIA ConnectX-8 NICs directly on the baseboard, each providing up to 800 Gb/s for node-to-node communication.

- Embedded NIC: 2 x 1 GbE.

- 1 x OCP 3.0 (x8 PCIe lanes) for network options.
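To put the per-NIC figure in context, the sketch below multiplies out the aggregate line rate of the 8 ConnectX-8 NICs. The `transfer_seconds` helper is hypothetical and idealized: it ignores protocol overhead, congestion, and storage speed, so real transfers will take longer.

```python
# Figures from the text: 8 ConnectX-8 NICs at up to 800 Gb/s each.
NICS = 8
GBPS_PER_NIC = 800  # gigabits per second (line rate)

aggregate_gbps = NICS * GBPS_PER_NIC      # 6400 Gb/s
aggregate_tbps = aggregate_gbps / 1000    # 6.4 Tb/s
aggregate_gbytes_s = aggregate_gbps / 8   # 800.0 GB/s (8 bits per byte)


def transfer_seconds(size_gb: float, rate_gbytes_s: float = aggregate_gbytes_s) -> float:
    """Idealized time to move size_gb gigabytes at full line rate."""
    return size_gb / rate_gbytes_s


# Hypothetical example: moving the full ~2304 GB of GPU memory off the node.
print(f"{aggregate_tbps} Tb/s aggregate, {transfer_seconds(2304):.2f} s for 2304 GB")
```

This prints `6.4 Tb/s aggregate, 2.88 s for 2304 GB`.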

Cooling:

- XE9780: Air-cooled, designed for integration into existing enterprise data centers.

- XE9780L: Liquid-cooled (direct liquid cooling, DLC), optimized for rack-scale deployment and energy efficiency. Its direct-to-chip liquid cooling reduces thermal strain and optimizes power use, and it can operate with warmer water temperatures (32°–36°C), reducing reliance on energy-intensive chillers.

Form Factor:

- 6U rack server.

- Weight: Up to 114.05 kg (251.44 pounds).

Power Supplies:

- Hot-swap redundant Titanium power supplies, with options including 3200W (277 VAC or 260-400 VDC) and 2800W (200-240 VAC or 240 VDC).

Other Features:

- PCIe: Up to 10 PCIe Gen5 x16 full-height, half-length slots.

- Embedded Management: iDRAC9, iDRAC Direct, iDRAC RESTful API with Redfish.

- Security features: Cryptographically signed firmware, Secure Boot, TPM 2.0.
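The iDRAC RESTful API follows the DMTF Redfish standard, so basic inventory can be read with a plain HTTPS GET. The sketch below uses only the Python standard library and assumes Dell's usual Redfish path for the host system, `/redfish/v1/Systems/System.Embedded.1`, with HTTP Basic authentication. The function names, host name, and credentials are hypothetical, and `summarize` reads only standard Redfish `ComputerSystem` properties.

```python
import base64
import json
import ssl
import urllib.request

# Dell iDRAC typically exposes the host as the Redfish ComputerSystem "System.Embedded.1".
SYSTEM_PATH = "/redfish/v1/Systems/System.Embedded.1"


def fetch_system(host: str, user: str, password: str, verify_tls: bool = True) -> dict:
    """GET the ComputerSystem resource from an iDRAC using HTTP Basic auth."""
    req = urllib.request.Request(f"https://{host}{SYSTEM_PATH}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Factory iDRAC certificates are often self-signed; skip checks only in a lab.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


def summarize(system: dict) -> dict:
    """Pick a few standard Redfish ComputerSystem properties (None if absent)."""
    return {
        "model": system.get("Model"),
        "power_state": system.get("PowerState"),
        "cpus": system.get("ProcessorSummary", {}).get("Count"),
        "memory_gib": system.get("MemorySummary", {}).get("TotalSystemMemoryGiB"),
    }
```

A call would look like `summarize(fetch_system("idrac.example.local", "root", "<password>", verify_tls=False))`, where the host and credentials are placeholders for your own iDRAC.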

Intended Tasks and Workloads:

The Dell PowerEdge XE9780 and XE9780L servers with NVIDIA HGX B300 are purpose-built for the most compute-intensive AI and HPC workloads, making them ideal for:

- Generative AI Training and Inference:

  - Large Language Model (LLM) training: Their massive GPU memory, high interconnect bandwidth (NVLink), and raw computational power are crucial for training extremely large and complex LLMs.

  - Large-scale AI inference: Optimized for real-time inference of large AI models, enabling applications like chatbots, content generation, and intelligent automation.

  - Agentic AI: Supporting the development and deployment of AI agents for various tasks.

- Machine Learning and Deep Learning (ML/DL):

  - Accelerating training and deployment of deep neural networks across various domains, including computer vision, natural language processing, and recommendation systems.

  - Handling extremely large datasets that require significant GPU memory and processing power.

- High-Performance Computing (HPC):

  - Complex simulations and modeling in scientific research, engineering, and finance.

  - Drug discovery, climate modeling, and other data-intensive scientific applications.

- Robotics and Digital Twins:

  - Powering the sophisticated AI models required for real-time control, perception, and decision-making in robotic systems.

  - Enabling the creation and simulation of highly detailed digital twins for industrial applications, smart cities, and more.

- Multi-Modal AI Applications:

  - Developing AI systems that can process and understand multiple types of data, such as combining images, video, text, and audio.
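To make the GPU-memory point for LLM work concrete, a common rule of thumb (an assumption here, not a Dell or NVIDIA figure) budgets about 16 bytes per parameter for mixed-precision training with the Adam optimizer and about 1 byte per parameter for FP8 inference weights. Ignoring activations, KV cache, and framework overhead, the ~2.3 TB of HBM3e bounds model size roughly as follows:

```python
# Rule-of-thumb bytes per parameter (assumptions; activations, KV cache,
# and framework overhead are excluded):
#   mixed-precision training with Adam: 2 (bf16 weights) + 2 (bf16 grads)
#     + 4 (fp32 master weights) + 4 + 4 (two fp32 Adam moments) = 16 bytes
#   inference with FP8 weights only: 1 byte
BYTES_PER_PARAM = {"train_adam_mixed": 16, "infer_fp8": 1}


def max_params_billion(hbm_gb: float, mode: str) -> float:
    """Upper bound on parameters (in billions) whose state fits in hbm_gb."""
    # 1 GB = 1e9 bytes, so GB / (bytes per parameter) = billions of parameters.
    return hbm_gb / BYTES_PER_PARAM[mode]


HBM_GB = 2304  # 8 x 288 GB, the ~2.3 TB per system quoted earlier
print(max_params_billion(HBM_GB, "train_adam_mixed"))
print(max_params_billion(HBM_GB, "infer_fp8"))
```

This prints `144.0` and `2304.0` (billions of parameters). Practical single-node limits are lower because activations and KV cache also need memory, which is why the largest models are sharded across many such servers.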

These servers are key components of Dell's "AI Factory with NVIDIA," a holistic solution designed to simplify and accelerate the entire AI lifecycle for enterprises, from training to deployment at scale. The liquid-cooled "L" variants are particularly suited for dense rack deployments in modern data centers that aim for maximum computational capacity while minimizing their energy footprint.

Best prices on official DELL PowerEdge R760 servers in Ukraine.

Free consultation by phone: +38 (067) 819 38 38

Server models available from stock in Kyiv:

Dell PowerEdge R760 Server - Intel Xeon Silver 4510 2.4-4.1GHz 12 Cores

Dell PowerEdge R760 Server - Intel Xeon Silver 4514Y 2.0-3.4GHz 16 Cores

Dell PowerEdge R760 Server - Intel Xeon Gold 6526Y 2.8-3.9GHz 16 Cores

Dell PowerEdge R760 Server - Intel Xeon Gold 5420+ 2.0-4.1GHz 28 Cores